A powerful and controversial new world of network analysis exists by the name of Deep Packet Inspection (DPI). While the technology to achieve this level of visibility has existed for decades, it’s only now that implementations have evolved to allow large amounts of data to be inspected in real time on fairly modest budgets. With the Edward Snowden files opening our eyes to government data collection programs, DPI has gone from networking jargon to front-page news.

Before diving into Deep Packet Inspection, let’s create some context by looking at how packets are constructed and a little bit about firewall history.

IP Packet

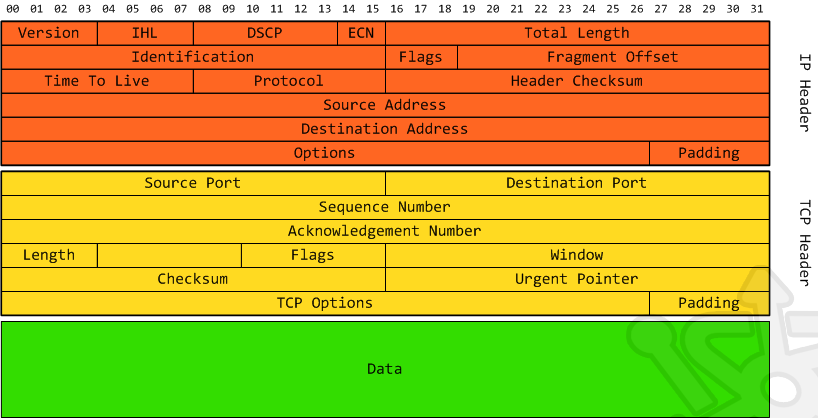

Simply put, a network packet is a means by which data is packaged to be transferred from one host to another. The diagram below shows the structure and fields contained in an IPv4 packet. The IP header deals with getting the packet from one host to another, while the TCP header tracks the state of the TCP communication between the two hosts. The data (or payload) contains information we want to transfer. Now this is a simplistic view, but it should provide the scope so that we can take a deeper look into the packet structure. This post will also reference layers of the OSI model, so if you need a crash course check out my earlier post here.

IP Packet Header

Let’s take an ever so brief look at the fields contained in the first header of a packet. Once again we’re not going to do justice to the complexity of the IP header, but to keep us on track I’ll discuss the main functionality of each field.

- Version – The format of the IP packet header. Commonly this will be 4 for IPv4. If you’re ahead of the curve you may encounter IPv6 traffic.

- IP Header Length (IHL) – The length of the packet header, expressed as a number of 32-bit words.

- Differentiated Services Code Point (DSCP) – Marks the packet’s traffic class so that routers can prioritize it, which matters for real-time communications such as VoIP. This field was once part of the Type of Service (ToS) byte.

- Explicit Congestion Notification (ECN) – A method by which a router can indicate congestion without the recipient having to find out through dropped packets.

- Total Length – Provides the size of the packet, including the header and the data.

- Identification – Identifies the datagram so that its fragments can be matched up when they are reassembled. Many implementations simply increment the value for each successive packet, although this is not required.

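- Flags – Control fragmentation. The Don’t Fragment (DF) bit indicates whether a router is allowed to fragment the datagram, and the More Fragments (MF) bit marks every fragment except the last.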

- Fragmentation Offset – If fragmentation is used, this field indicates where the fragment sits relative to the start of the original datagram, measured in 8-byte units.

- Time To Live (TTL) – Number of hops before the packet expires.

- Protocol – Indicates the next encapsulated protocol. In our case we’ll be using TCP.

- Header checksum – Used for error detection. The value is generated by applying an algorithm to all the fields in the header.

- Source Address – Sender of the packet.

- Destination Address – Final destination of the packet.

- Options – Extra options. Variable length.

- Padding – Adds extra bits to make the header length a multiple of 32 bits.
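To make the field list above a little more concrete, here is a minimal Python sketch that decodes the fixed 20-byte portion of an IPv4 header with the standard `struct` module. The function name `parse_ipv4_header` and the sample addresses are my own illustrations rather than part of any particular tool, and the sketch ignores Options entirely.

```python
import socket
import struct


def parse_ipv4_header(raw: bytes) -> dict:
    """Decode the fixed 20-byte part of an IPv4 header (options are ignored)."""
    if len(raw) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes long")
    (ver_ihl, dscp_ecn, total_length, identification, flags_frag,
     ttl, protocol, checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", raw[:20])
    return {
        "version": ver_ihl >> 4,                  # upper 4 bits of the first byte
        "header_bytes": (ver_ihl & 0x0F) * 4,     # IHL counts 32-bit words
        "dscp": dscp_ecn >> 2,                    # upper 6 bits
        "ecn": dscp_ecn & 0x03,                   # lower 2 bits
        "total_length": total_length,
        "identification": identification,
        "dont_fragment": bool(flags_frag & 0x4000),
        "more_fragments": bool(flags_frag & 0x2000),
        "fragment_offset": flags_frag & 0x1FFF,   # in units of 8 bytes
        "ttl": ttl,
        "protocol": protocol,                     # 6 means TCP, 17 means UDP
        "checksum": checksum,
        "source": socket.inet_ntoa(src),
        "destination": socket.inet_ntoa(dst),
    }


# A hand-built sample header: IPv4, IHL of 5 words, DF set, TTL 64, carrying TCP.
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0x00, 40, 0x1C46, 0x4000, 64, 6, 0,
    socket.inet_aton("192.168.1.10"), socket.inet_aton("203.0.113.5"),
)
header = parse_ipv4_header(sample)
print(header)
```

The checksum in the sample is left at zero for brevity, so a real stack would reject this packet; the point is only to show where each field lives.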

TCP Header

Following the IP header we have the transport layer header. In our case we’ll look at the TCP header.

- Source Port – Indicates the port from which the segment was sent.

- Destination Port – The port on the destination host that the segment is addressed to.

- Sequence Number – Position of the first data byte in the segment.

- Acknowledgment Number – The next data byte expected by the receiving host.

- Length – Indicates the length of the transport layer header, counted in 32-bit words. Also known as the Data Offset.

- Flags – Field that directly controls the flow of the connection. Commonly known as the control bits. This field is particularly interesting and I suggest researching it if you are not already familiar with it.

  - URG – Prioritizes data.

  - ACK – Acknowledges received data.

  - PSH – Immediately pushes data.

  - RST – Aborts a connection.

  - SYN – Initiates a connection.

  - FIN – Closes a connection.

- Window – Amount of data that can be accepted.

- Checksum – Used for error detection.

- Urgent Pointer – Points to the end of the urgent data.

- Options – As with the IP header we have additional options, particularly used in conjunction with the SYN flag.

- Padding – Once again, pads the header to a multiple of 32 bits.
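The TCP fields above can be pulled apart in much the same way as the IP header. The sketch below is only an illustration: `parse_tcp_header` and `TCP_FLAGS` are names I made up, options are ignored, and only the six classic control bits are decoded.

```python
import struct

# The six classic control bits, in the order listed above.
TCP_FLAGS = [
    ("URG", 0x20), ("ACK", 0x10), ("PSH", 0x08),
    ("RST", 0x04), ("SYN", 0x02), ("FIN", 0x01),
]


def parse_tcp_header(raw: bytes) -> dict:
    """Decode the fixed 20-byte part of a TCP header (options are ignored)."""
    if len(raw) < 20:
        raise ValueError("a TCP header is at least 20 bytes long")
    (src_port, dst_port, seq, ack, offset_flags,
     window, checksum, urgent) = struct.unpack("!HHIIHHHH", raw[:20])
    return {
        "source_port": src_port,
        "destination_port": dst_port,
        "sequence": seq,
        "acknowledgment": ack,
        "header_bytes": (offset_flags >> 12) * 4,   # data offset is in 32-bit words
        "flags": [name for name, bit in TCP_FLAGS if offset_flags & bit],
        "window": window,
        "checksum": checksum,
        "urgent_pointer": urgent,
    }


# A hand-built SYN from an ephemeral port to port 80 (data offset 5, SYN bit set).
syn = struct.pack("!HHIIHHHH", 50000, 80, 1000, 0, 0x5002, 65535, 0, 0)
tcp = parse_tcp_header(syn)
print(tcp["destination_port"], tcp["flags"])
```

A SYN/ACK reply would carry `0x12` in the low bits instead, which this sketch reports as both `ACK` and `SYN`.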

Packet Data

Following the packet header you have the data. This is the information we’re looking to transfer from one host to another. As most data is too large to send in a single packet, it is broken up into pieces (segments, in TCP’s case) and sent in successive packets.

The Bits

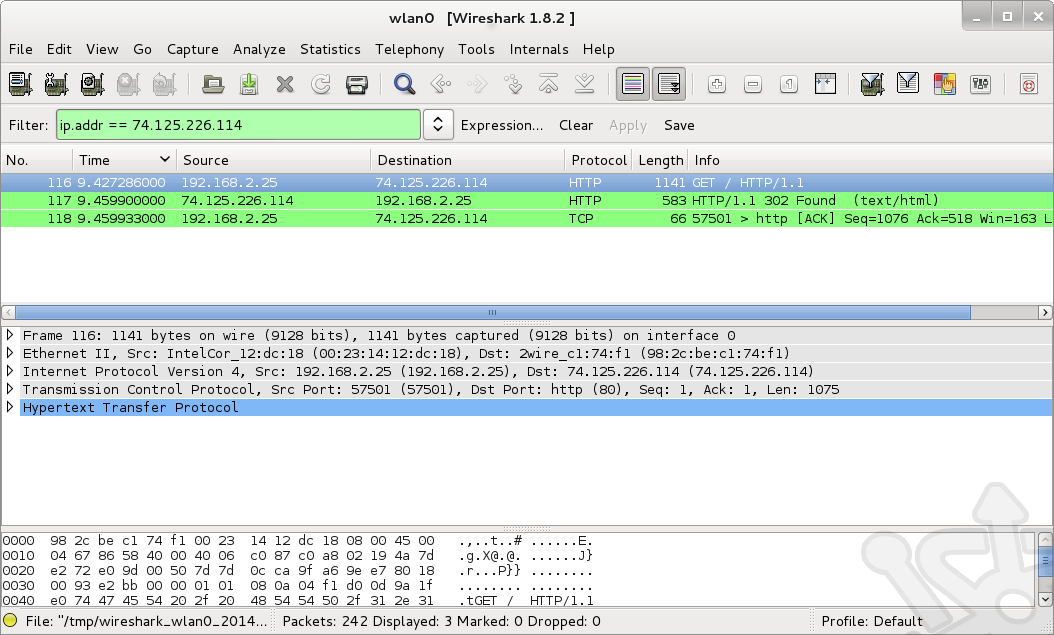

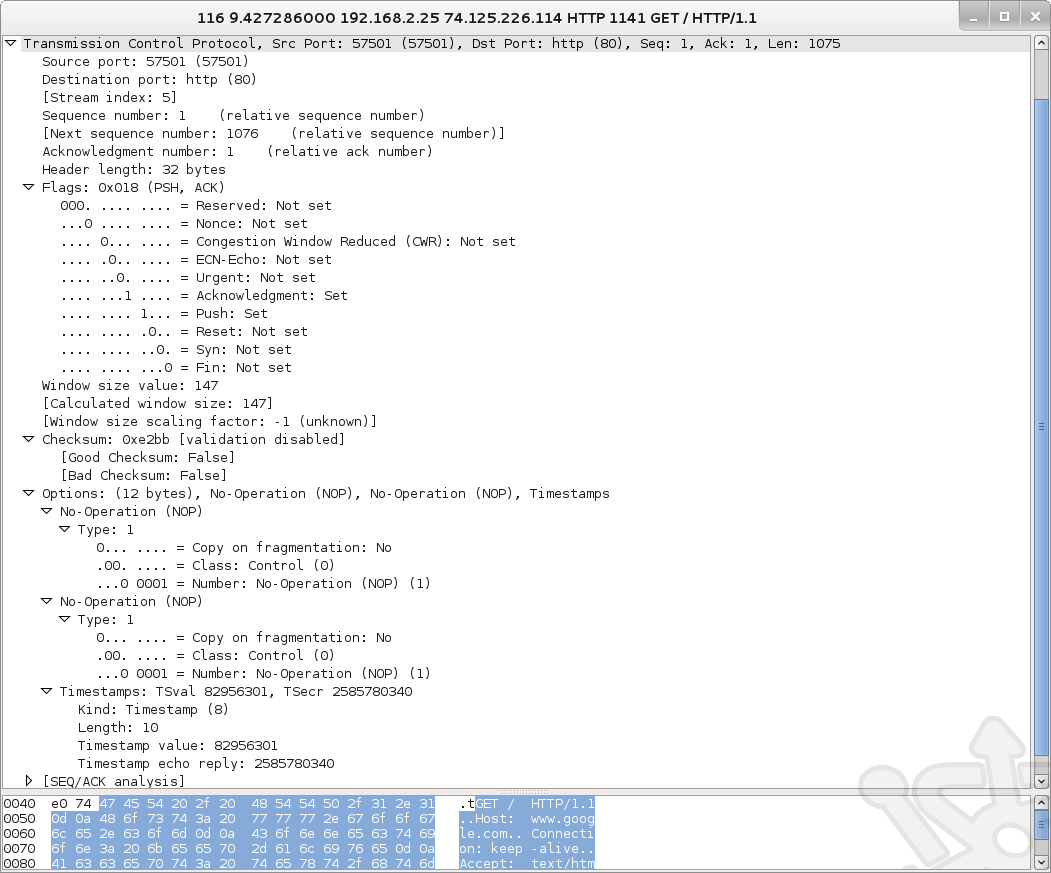

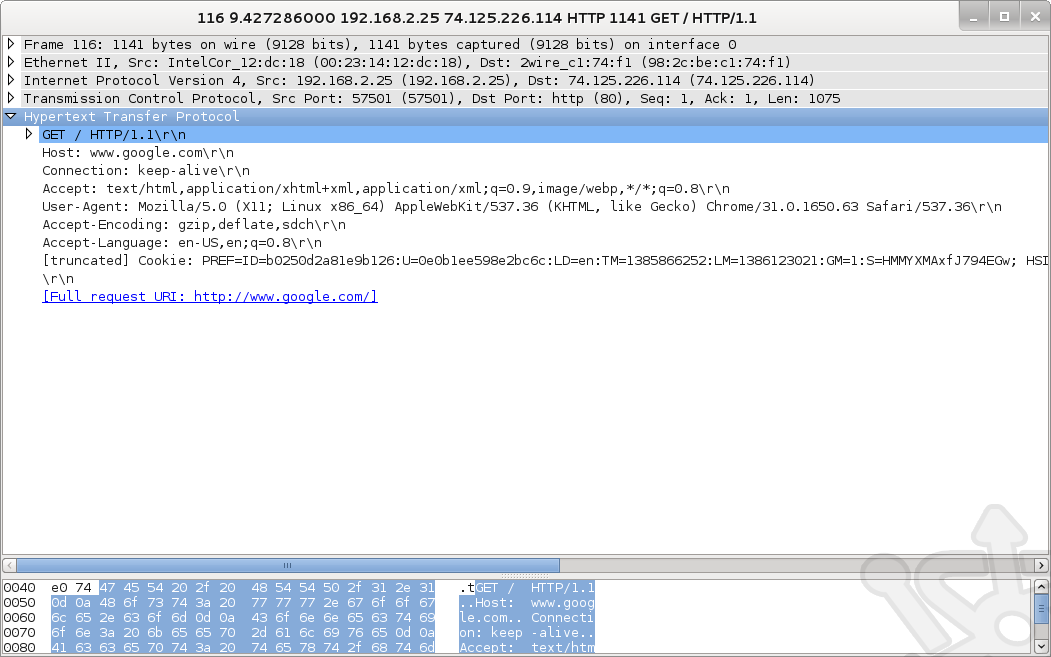

While this is all well and good, what does it look like in the wild? For this we’ll turn to our favorite program, Wireshark, and have a look at some of these fields in real traffic.

With Wireshark capturing traffic I’ve also pulled up a web browser and navigated to google.com. Let’s take a look at the GET request.

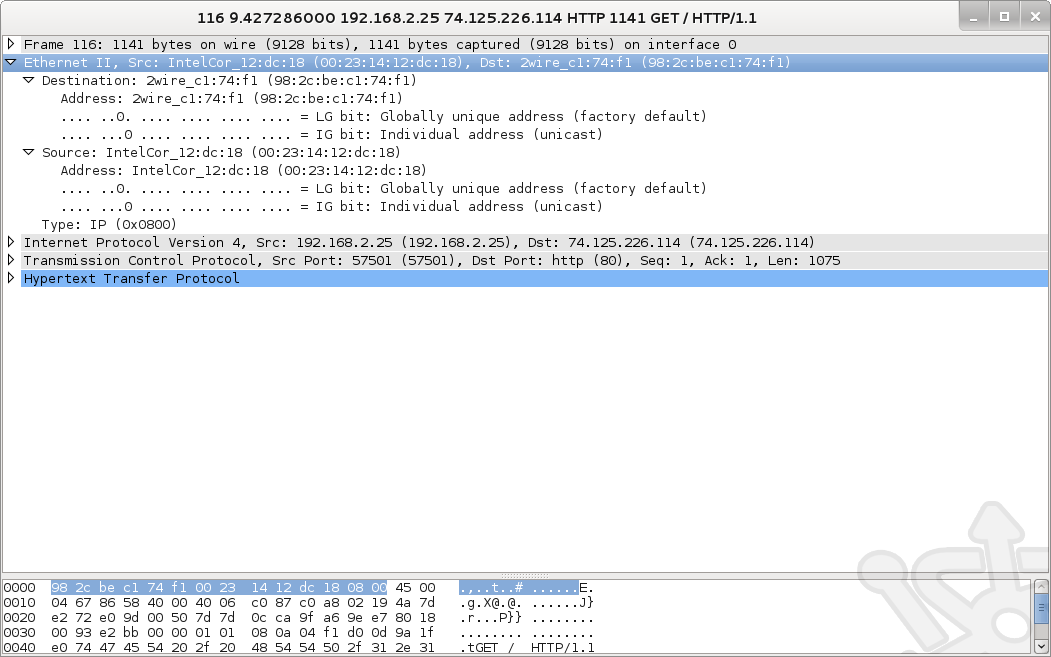

Expanding the Ethernet II node we see references to MAC addresses. This is the encapsulation of the packet used for transport on the data link layer (layer 2). We didn’t cover frame encapsulation, but it’s important to know that an IP packet traversing the network will be framed. In our case the source MAC is my laptop and the destination is the local router.

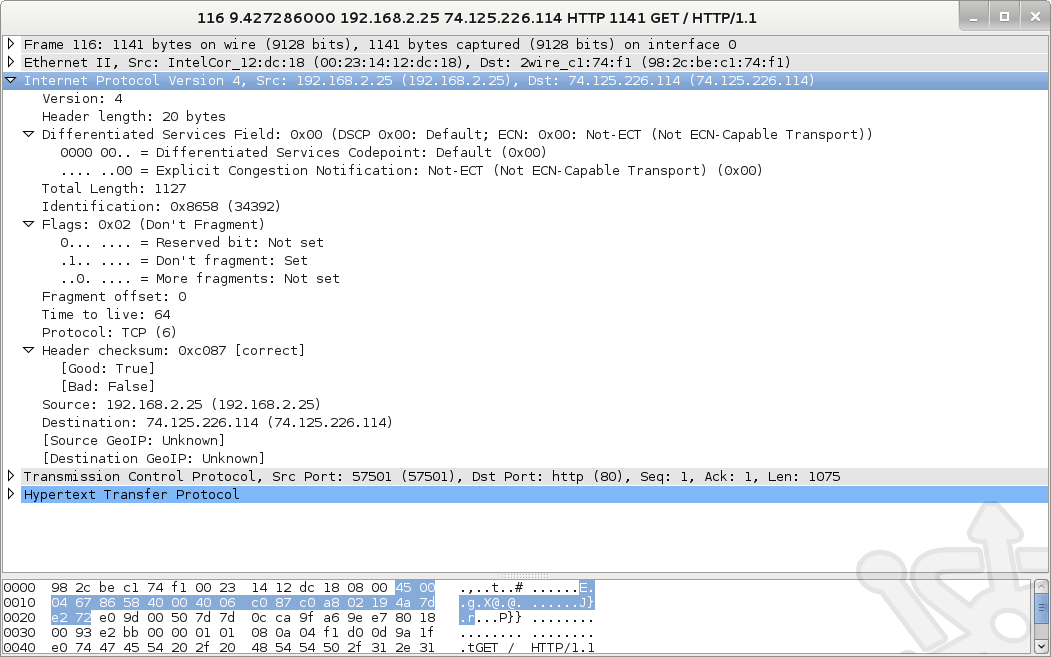

Once we look past the frame, the first thing we come across is the IP Packet Header. Immediately we see the fields we referenced above such as Version, Header length, Differentiated Service Field as well as the source and destination IP addresses.

Past the IP header we find the TCP Header. Once again we can easily identify the fields labelled in our diagram above such as Source and Destination ports, Sequence and Acknowledgement number as well as the Flags.

Past the TCP Header we finally come across the data. Being that we’re looking at a GET request, this will be the actual request made by my laptop to the Google website.

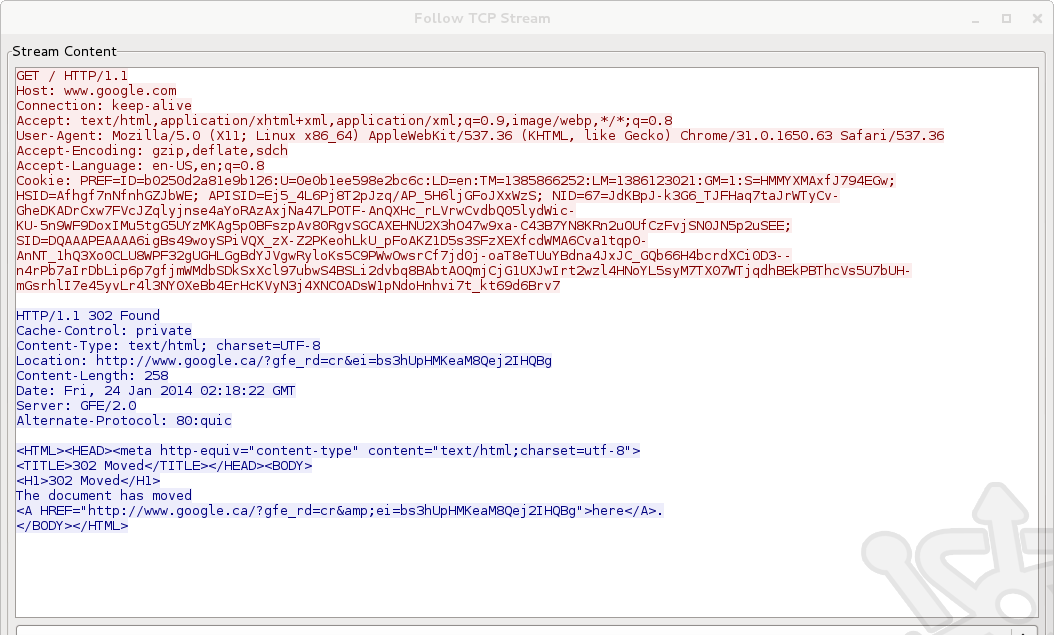

If we follow the stream we’ll see how Wireshark uses the Sequence and Acknowledgement number fields (among others) to reconstruct the data into a format readable by the higher levels of the OSI model. Taking this look into one simple web request puts into perspective the impressive task done by Deep Packet Inspection. Beyond reading and evaluating all the headers above, the DPI device must also collect and reassemble data streams before it can evaluate and act upon them. But we’re jumping ahead.
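To give a feel for what reassembly involves, here is a deliberately simplified Python sketch that rebuilds a byte stream from out-of-order and duplicated segments using only their sequence numbers. It is not how Wireshark or any DPI product actually does it: the `reassemble` function is hypothetical, it handles one direction of one connection, it ignores sequence number wraparound, and it simply stops at the first gap.

```python
def reassemble(segments, first_seq):
    """Rebuild a contiguous byte stream from (sequence_number, payload) pairs.

    Segments may arrive out of order or more than once. Reassembly stops at
    the first gap, because the bytes after a missing segment cannot be placed yet.
    """
    stream = bytearray()
    next_seq = first_seq
    for seq, payload in sorted(segments, key=lambda item: item[0]):
        if seq + len(payload) <= next_seq:
            continue                      # retransmission of bytes we already have
        if seq > next_seq:
            break                         # gap: a segment in between is missing
        stream += payload[next_seq - seq:]  # skip any overlapping prefix
        next_seq = seq + len(payload)
    return bytes(stream)


# Two segments of an HTTP request, captured out of order with one duplicate.
first = b"GET / HTTP/1.1\r\n"
second = b"Host: example.com\r\n\r\n"
captured = [(1000 + len(first), second), (1000, first), (1000, first)]
request = reassemble(captured, first_seq=1000)
print(request.decode("ascii"))
```

Only once the stream is back in one piece can a device start asking what the payload actually is.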

Firewall History

Now that we’ve taken a quick look at packets, let’s discuss firewalls. So what is a firewall? At its core, it’s a device that controls the flow of packets. Packets are permitted or denied when the firewall inspects the various parts of the packet and compares them against preconfigured rules.

First-generation firewalls are generally considered stateless, meaning that they are not aware of the context of the packets they are handling. Each packet is handled independently of the previous one, and as such the firewall has no way of knowing whether the packet being processed belongs to an existing connection. Stateless firewalls operate at layer 3 and only inspect the IP header of a packet.

A stateful firewall performs what is often termed shallow packet inspection. As well as looking at the IP header it will analyze the information at layer 4. Therefore stateful firewalls are protocol (TCP/UDP) aware and are able to see the sequence number of the packets traversing their interfaces. As the firewall knows the state of the conversation it knows whether a packet is a new conversation or part of an existing one. With this information the firewall can make a better informed decision as to whether this traffic should be permitted.
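The difference between the two generations is easier to see in code. Below is a toy Python sketch of connection tracking, under heavy assumptions: the `StatefulFilter` class is my own invention, it tracks only the address and port pairs of outbound TCP connections, and it skips everything a real firewall also checks, such as sequence numbers, timeouts and the full TCP state machine.

```python
class StatefulFilter:
    """Toy connection tracker: replies are allowed only for connections we opened."""

    def __init__(self, allowed_outbound_ports):
        self.allowed_outbound_ports = set(allowed_outbound_ports)
        self.connections = set()   # (inside_ip, inside_port, outside_ip, outside_port)

    def outbound(self, src, sport, dst, dport, flags):
        key = (src, sport, dst, dport)
        if "SYN" in flags and "ACK" not in flags:
            # A new conversation: check the rule base, then remember it.
            if dport not in self.allowed_outbound_ports:
                return False
            self.connections.add(key)
            return True
        return key in self.connections

    def inbound(self, src, sport, dst, dport, flags):
        key = (dst, dport, src, sport)   # same connection, seen from the other side
        if key not in self.connections:
            return False                 # unsolicited traffic is dropped
        if "RST" in flags:
            self.connections.discard(key)
        return True


fw = StatefulFilter(allowed_outbound_ports={80, 443})
laptop, server = ("192.168.1.10", 50000), ("203.0.113.5", 80)

unsolicited = fw.inbound(*server, *laptop, flags={"SYN", "ACK"})
opened = fw.outbound(*laptop, *server, flags={"SYN"})
reply = fw.inbound(*server, *laptop, flags={"SYN", "ACK"})
print(unsolicited, opened, reply)
```

A stateless filter would have to judge that SYN/ACK purely on its own headers, whereas the tracker accepts it only once it has seen the matching outbound SYN.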

In the third generation we have application firewalls, which provide insight into layer 7. Modern application firewalls have greater control over the application layer, giving us the topic of our post, Deep Packet Inspection. In addition, many of these application firewalls combine additional services such as Intrusion Prevention Systems (IPS) in what are termed Next Generation Firewalls (NGFW) or Unified Threat Management (UTM) devices.

Deep Inspection

So what is Deep Packet Inspection exactly? Deep inspection is the process of looking beyond the usual IP and TCP headers and evaluating the packet payload as well. Effectively, firewalls with DPI have the ability to evaluate and take action based on layers 2 through 7. This insight into layer 7 is what makes DPI so powerful. Firewalls are no longer limited to making decisions based on the information available in the headers; they can now look at the actual data itself to determine whether it should be permitted.

Not that long ago a stateful firewall was enough. You could analyse the IP and TCP headers and have a good idea of the data that you were dealing with. It was just a case of looking at the protocol and deciding whether to allow or deny it. The ubiquity of HTTP has led to the development of increasingly sophisticated applications that straddle the lines between protocols. Today port 80 is no longer just the home of GET and POST requests and instead carries a whole gamut of different communications. You’re no longer able to trust that the traffic you’re allowing is safe or appropriate based on the headers alone.

Another example of this is the File Transfer Protocol (FTP). The fact that everything in the computerized world eventually boils down to a file makes unfettered access to transfer files a huge liability. As such, many organizations have put in place strict rules limiting or completely blocking FTP traffic. It would be simple enough to block outbound traffic to port 21 and be done with it. But what happens when the FTP server uses a non-standard port? What happens if the user doesn’t use FTP at all, but instead tries to upload a file to a website? Deep Packet Inspection gives you the ability to look at the payload instead of trusting in the port. In theory you have the ability to say that you don’t want FTP on any port, or that you don’t want files matching a specified regular expression to be uploaded to any website.
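As a rough illustration of deciding on the payload rather than the port, here is a small Python sketch. The signatures are toy examples I wrote for this post, nowhere near what a commercial engine ships (the FTP pattern, for one, would also match some commands used by other text protocols), and the names `SIGNATURES`, `classify` and `permitted` are hypothetical.

```python
import re

# Toy payload signatures, checked against the start of a reassembled stream.
SIGNATURES = [
    ("http", re.compile(rb"^(GET|POST|HEAD|PUT|DELETE|OPTIONS) \S+ HTTP/1\.[01]\r\n")),
    ("ftp", re.compile(rb"^(USER|PASS|RETR|STOR|PASV|EPSV)( [^\r\n]*)?\r\n")),
    # TLS handshake record (0x16), version 3.x, then a ClientHello (0x01).
    ("tls", re.compile(rb"^\x16\x03[\x00-\x04]..\x01", re.DOTALL)),
]


def classify(payload: bytes) -> str:
    """Return the name of the first signature that matches, or 'unknown'."""
    for name, pattern in SIGNATURES:
        if pattern.match(payload):
            return name
    return "unknown"


def permitted(dst_port: int, payload: bytes, blocked=frozenset({"ftp"})) -> bool:
    """Policy decision based on what the traffic is, not where it is going.

    dst_port is accepted only to make the point that it plays no part here.
    """
    return classify(payload) not in blocked


print(permitted(80, b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n"))
print(permitted(8021, b"STOR quarterly-report.pdf\r\n"))
```

The second call is refused even though port 8021 appears in no rule, which is exactly the case a port-based block on 21 would miss.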

Sounds great, right? Well, that is only within the limitations of your device’s ability to make sense of the data being transmitted. At the moment this is the battleground between vendors. The technology has advanced to a level that makes DPI feasible for organizations of any size, and now each of the vendors is working hard to map out payloads. It’s one thing to be able to see what’s in the payload; it’s quite another to quickly make sense of what’s being seen and act upon it in real time. In this way, it seems that firewall vendors will operate more like our anti-virus and anti-malware vendors, where their product will only be as good as their last payload signatures.

DPI already has many uses today, and as payload signatures get more accurate, firewall makers and network administrators are only limited by their own creativity in leveraging them. Some of the major applications for DPI today are as follows:

- Traffic shaping – Limiting or outright blocking of specific traffic.

- Ensuring service levels – Tied in with traffic shaping, an administrator can ensure priority traffic is properly treated as such.

- Rogue applications – Just because it’s not running on port 21 doesn’t mean it isn’t an FTP service. DPI allows services on non-standard ports to be identified and, if necessary, blocked.

- Malware/virus – Visibility into the payload allows for blocking of infectious programs before they even reach the host.

- Intrusion – In addition to malware and viruses, you can also uncover intrusions, whether through backdoors, injections or attempted overflows.

- Targeted advertising – While not most people’s favorite application for DPI, it has been used to track users’ browsing habits, allowing customized ads. A few companies have even gone out of business trying (see NebuAd).

- Data loss prevention – Identify key pieces of information which can then be blocked from being transmitted outside the organization.
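To pick one item from the list, data loss prevention often comes down to pattern matching on the payload. The Python sketch below looks for strings that resemble payment card numbers and confirms them with the Luhn checksum before flagging anything. It is a simplified illustration with hypothetical names (`CARD_CANDIDATE`, `luhn_valid`, `contains_card_number`), and it only works on payloads the device can actually read in the clear.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE = re.compile(rb"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right and sum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def contains_card_number(payload: bytes) -> bool:
    """True if the payload holds something that looks like a valid card number."""
    for match in CARD_CANDIDATE.finditer(payload):
        digits = re.sub(rb"\D", b"", match.group()).decode("ascii")
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            return True
    return False


# 4111 1111 1111 1111 is a widely used test number that passes the Luhn check.
upload = b"POST /form HTTP/1.1\r\n\r\nname=test&card=4111 1111 1111 1111&x=1"
print(contains_card_number(upload))
```

The checksum step is there to cut down on false positives, since plenty of long digit strings in ordinary traffic are not card numbers at all.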

Concerns

No doubt the ability to look deep into packets is a great tool for administrators hoping to keep their networks running at their best, but some of this visibility has raised concerns among privacy and net neutrality advocates. As mentioned above, the same technology that helps protect our networks and maintain the quality of service can also be used to the detriment of the end user.

It’s worth noting that there is a distinction between the use of Deep Packet Inspection in the corporate versus the home environment. Specifically, there is an understanding (and often a written consent) that you will limit your internet usage to work-related activity when you’re sitting in the office. As such, there is an awareness that your internet traffic is being controlled in some way while you’re at work. On the other hand, when you’re in your own home you expect privacy in your browsing habits. Many of the concerns raised about DPI deployment relate to its usage by ISPs, where your private web browsing could be subject to monitoring or shaping without your knowledge or explicit consent.

Traffic shaping represents one area that has been raised as a concern, mostly because it’s directly noticeable and measurable by the end user. It’s often seen in its implementation by ISPs in their attempt to manage the amount of traffic on their network. By targeting high-bandwidth applications and throttling the amount of traffic these applications can use, they can maintain a quality of service across their network. In the past, companies like Verizon and Comcast have faced the ire of the FCC for some of their traffic shaping practices. Famously, in 2008 the FCC ruled that Comcast was wrong in limiting BitTorrent traffic and had the company commit to finding a better way to manage its infrastructure without putting specific limitations on end users. Despite this win for net neutrality advocates, just this week Washington’s appeals court struck down rules the FCC put into place guaranteeing users unfettered access to the internet. In addition to peer-to-peer services, other popular sites of the day like Netflix and YouTube have found themselves victims of traffic throttling. In some cases the accusation has been made that ISPs are purposely throttling streaming media sites in order to better place their own service in the market.

Privacy represents the larger worry to most people. The fact that data can be read in real time means data could be stolen unbeknownst to the end user. A common counterargument to this is that many of today’s services are incorporating SSL into their solutions. Unfortunately this is of little consolation when you see the offerings put forth by many security manufacturers promising easy man-in-the-middle attacks to break into SSL. You need only read through a few of the WikiLeaks whitepapers to see the sophisticated certificate re-signing solutions which allow these devices to intercept and decrypt communications while not disrupting so-called secure connections. Combine Deep Packet Inspection with re-signing techniques and it’s just about the same as sending everything in plain text.

The truth is that any attempt to prevent the advancement of technology will ultimately fail, and the Luddite will invariably lose. In the end it is a question of policy, not technology. The battle should not be to prevent Deep Packet Inspection, but to ensure its responsible use.
